CVSS – Common Vulnerability Scoring System

In IT security, the Common Vulnerability Scoring System (CVSS) is used not only to classify the severity of software vulnerabilities but also to characterise and individually assess them.

Learn how to read the Common Vulnerability Scoring System (CVSS) and the vulnerability messages published by NIST. As a manufacturer (e.g. of medical devices), you should monitor these messages continuously so that you can assess and manage the risks for your devices. This will also help you to fulfil legal requirements.

1. How the Common Vulnerability Scoring System helps in the development of secure products

a) Assessing vulnerability individually

The vulnerability scores classify the type and severity of software vulnerabilities in IT security. They provide different parties (e.g. manufacturers, security researchers, notified bodies, authorities, penetration testers) with an individual definition of severity.

b) Providing input for risk analysis

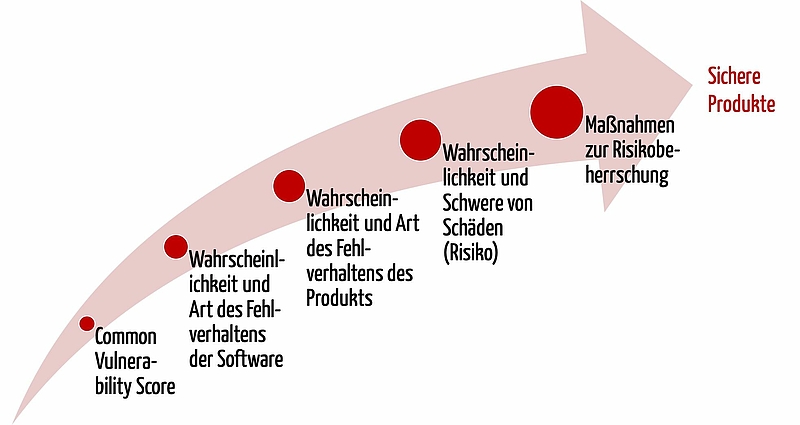

However, the scores do not (!) measure the risks, as defined by ISO 14971, that arise from software that can be compromised.

- Instead, the scores help to assess how, and with what probability, software will fail to behave according to its specifications.

- In turn, this enables an assessment of how, and with what probability, the product will fail to behave according to its specifications.

- Subsequently, it enables conclusions to be drawn as to how high the risks (probability and severity of damage) are for patients.

Consequently, the scores help us to take suitable measures and so develop safe products.

c) Communicating vulnerabilities (interoperability)

Vulnerabilities are not only characterised by their score, but also by other attributes:

- clear identification of the vulnerability

- affected product (e.g. operating system, library)

- affected version of the product

- problem type

- description of the vulnerability

- status

- date of the initial message

- date of the last revision

- references
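The attributes above can be pictured as a simple record type. The following is only a sketch: the class name, field names and the `affects` helper are hypothetical and do not reproduce the CVE or NVD data formats, and the example values are placeholders rather than a real entry.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class VulnerabilityRecord:
    """Hypothetical container for the attributes listed above."""
    cve_id: str                        # clear identification (CVE ID)
    product: str                       # affected product, e.g. operating system or library
    affected_versions: List[str]       # affected versions of the product
    problem_type: str
    description: str
    status: str
    first_published: date              # date of the initial message
    last_revised: date                 # date of the last revision
    references: List[str] = field(default_factory=list)
    cvss_vector: Optional[str] = None  # score-related part, if disclosed

    def affects(self, product: str, version: str) -> bool:
        """Return True if the record names exactly this product and version."""
        return (product.lower() == self.product.lower()
                and version in self.affected_versions)


# Placeholder values only; this is not a real CVE entry.
record = VulnerabilityRecord(
    cve_id="CVE-0000-00000",
    product="examplelib",
    affected_versions=["1.0.0", "1.0.1"],
    problem_type="example problem type",
    description="Placeholder description.",
    status="published",
    first_published=date(2000, 1, 1),
    last_revised=date(2000, 1, 2),
)
print(record.affects("ExampleLib", "1.0.1"))  # True
```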

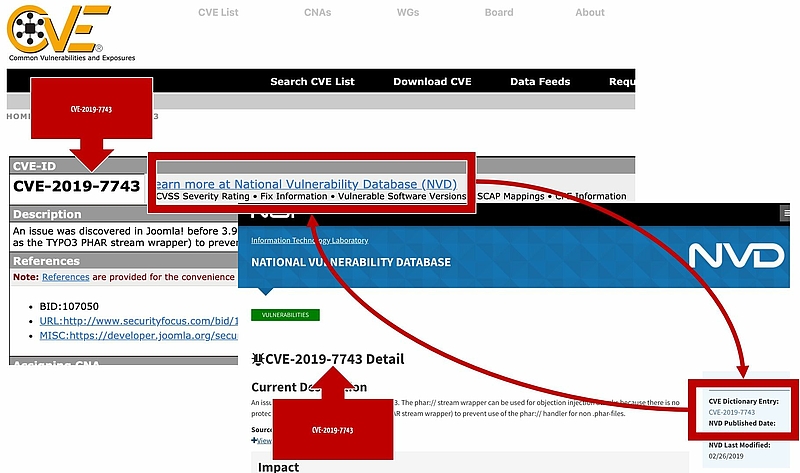

Clear numbering of the vulnerabilities is provided by the CVE ID. CVE stands for Common Vulnerabilities and Exposures. This numbering system is also used by NIST's National Vulnerability Database (NVD) (see Fig. 2).

One exchange format, for example, is the XML-based format specified by the Common Vulnerability Reporting Framework (CVRF). NIST uses another format and stores other attributes, which complicates interoperability somewhat. Fortunately, the individual entries in both 'databases' (CVE and NIST) refer to each other (see Fig. 2).

2. Which metrics are included and how the scores are calculated

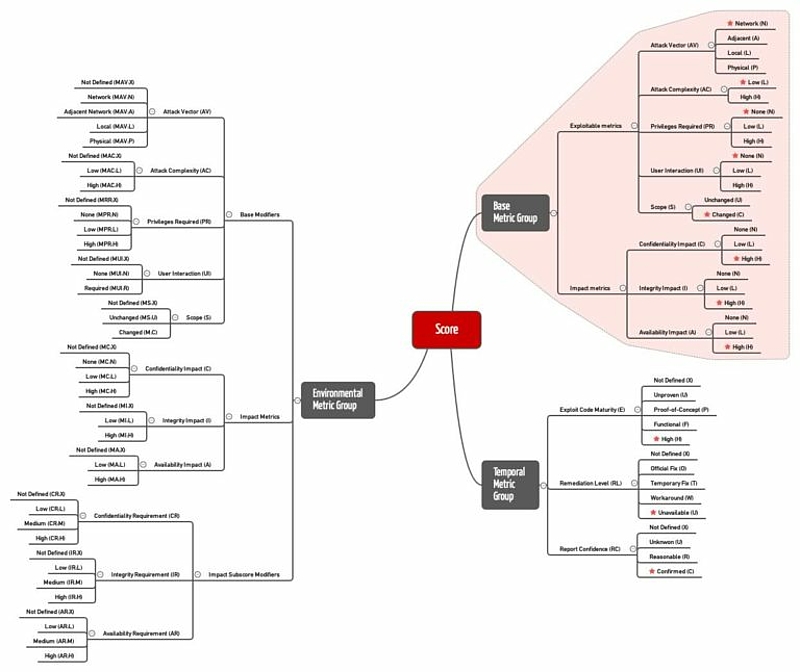

The Common Vulnerability Scoring System involves several types of scores that may influence each other:

- base metric scores

- temporal metric scores

- environmental metric scores

When a new vulnerability is detected, usually only the base score metrics are disclosed (shown in Fig. 3 against a red background).

The scores range from 0 to 10, where 10 stands for the highest vulnerability and the highest severity.
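In practice, the numeric value is often translated into a qualitative rating. The sketch below assumes the rating bands of the CVSS v3.x qualitative severity scale (None, Low, Medium, High, Critical); the function name is hypothetical.

```python
def severity_rating(score: float) -> str:
    """Map a CVSS score (0.0 to 10.0) to a qualitative rating.

    Bands assumed from the CVSS v3.x qualitative severity rating scale.
    """
    if not 0.0 <= score <= 10.0:
        raise ValueError("CVSS scores range from 0.0 to 10.0")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


print(severity_rating(8.8))  # High
```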

a) CVSS base score

The CVSS base score is calculated from the base score metrics. These metrics include:

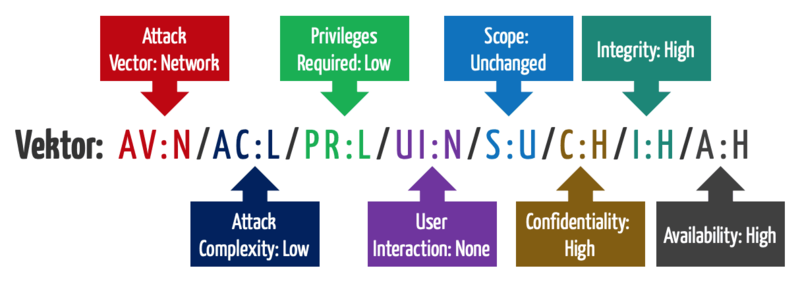

- Attack Vector: this metric indicates how 'close' an attacker needs to be to the object. At one end of the scale, physical access is needed (AV:P); at the other end, the object can be attacked via the network (AV:N).

- Attack Complexity: how easily can the attacker reach their target? Does success depend on conditions that are beyond the attacker's control?

- Privileges Required: does the attacker need privileges (authorisation) before they can carry out their attack? If so, the score is lower; otherwise, it is higher.

- User Interaction: must a user do anything first before the attacker reaches their target? If the user, for example, has to click on a link first, the value would be ‘required’ (UI:R).

- Scope: the scope describes whether the effects of an attack 'only' affect the vulnerable component or other components as well. In the latter case ('changed', S:C), the scope increases the base score, provided the base score has not already reached the maximum value of 10.

- Confidentiality Impact: this metric indicates to what extent the attack affects confidentiality. A ‘high’ (C:H) value means that confidentiality has been totally lost.

- Integrity Impact: in the same way, this metric describes the influence on the integrity of the data. If, for example, the attackers were able to modify all files, the impact would be set to ‘high’ (I:H).

- Availability Impact: this metric is very similar to the other impact metrics. If the attacker is able to deny the availability of the component completely so that it can no longer be accessed, the maximum value 'high' (A:H) is reached.

The base score is calculated from these metrics using a formula. Take the vector 'AV:N/AC:L/PR:L/UI:N/S:U/C:H/I:H/A:H' as an example; Figure 4 explains the meaning of this vector.
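For the example vector, the calculation can be sketched as follows. The numeric weights, the constants and the rounding rule are assumed from the CVSS v3.1 specification (the metric names used above match CVSS v3.x); the function names are hypothetical, and the official specification and calculator remain authoritative.

```python
import math

# Numeric weights, assumed from the CVSS v3.1 specification.
AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}
AC = {"L": 0.77, "H": 0.44}
UI = {"N": 0.85, "R": 0.62}
CIA = {"H": 0.56, "L": 0.22, "N": 0.0}
PR = {False: {"N": 0.85, "L": 0.62, "H": 0.27},  # scope unchanged
      True: {"N": 0.85, "L": 0.68, "H": 0.5}}    # scope changed


def roundup(value: float) -> float:
    """Round up to one decimal place, avoiding floating-point artefacts."""
    scaled = int(round(value * 100000))
    if scaled % 10000 == 0:
        return scaled / 100000.0
    return (math.floor(scaled / 10000) + 1) / 10.0


def base_score(vector: str) -> float:
    """Compute the base score from a vector such as 'AV:N/AC:L/...'."""
    m = dict(part.split(":") for part in vector.split("/"))
    changed = m["S"] == "C"

    # Impact sub-score from confidentiality, integrity and availability
    iss = 1 - (1 - CIA[m["C"]]) * (1 - CIA[m["I"]]) * (1 - CIA[m["A"]])
    if changed:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
    else:
        impact = 6.42 * iss

    # Exploitability from the four exploitability metrics
    exploitability = (8.22 * AV[m["AV"]] * AC[m["AC"]]
                      * PR[changed][m["PR"]] * UI[m["UI"]])

    if impact <= 0:
        return 0.0
    total = impact + exploitability
    if changed:
        total *= 1.08  # a changed scope raises the score
    return roundup(min(total, 10))


print(base_score("AV:N/AC:L/PR:L/UI:N/S:U/C:H/I:H/A:H"))  # 8.8
```

For this vector, the impact sub-score is roughly 5.87 and the exploitability roughly 2.84; their sum of about 8.71 is rounded up to 8.8.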

b) Temporal score

The temporal score measures how readily the vulnerability can be exploited at the present time. Ready-made exploit kits, for example, increase the score; existing patches, on the other hand, decrease it.

- Exploit Code Maturity (E): this metric indicates how easily the vulnerability can be exploited using exploit kits. It is also a measure of the 'performance and usability' of this exploit.

- Remediation Level (RL): the remediation level, on the other hand, is more of a measure of the performance of the countermeasure, e.g. in the form of a software patch.

- Report Confidence (RC): this metric indicates how certain it is that the vulnerability actually exists. The highest values for the temporal score are achieved when the values are set to ‘confirmed’ or ‘not defined’.
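How the three temporal metrics act on the base score can be sketched as a simple multiplication. The weights below are assumed from the CVSS v3.1 specification, and the function names are hypothetical. Since no weight exceeds 1.0, the temporal score in this sketch never exceeds the base score.

```python
import math

# Weights assumed from the CVSS v3.1 specification ("X" = not defined).
E = {"X": 1.0, "H": 1.0, "F": 0.97, "P": 0.94, "U": 0.91}   # Exploit Code Maturity
RL = {"X": 1.0, "U": 1.0, "W": 0.97, "T": 0.96, "O": 0.95}  # Remediation Level
RC = {"X": 1.0, "C": 1.0, "R": 0.96, "U": 0.92}             # Report Confidence


def roundup(value: float) -> float:
    """Round up to one decimal place, avoiding floating-point artefacts."""
    scaled = int(round(value * 100000))
    if scaled % 10000 == 0:
        return scaled / 100000.0
    return (math.floor(scaled / 10000) + 1) / 10.0


def temporal_score(base: float, e: str = "X", rl: str = "X", rc: str = "X") -> float:
    """Scale a base score by the three temporal metrics."""
    return roundup(base * E[e] * RL[rl] * RC[rc])


# Functional exploit code (E:F), official fix available (RL:O), confirmed report (RC:C)
print(temporal_score(8.8, e="F", rl="O", rc="C"))  # 8.2
```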

c) Environmental score

At first glance, the environmental metric group contains the most metrics. Ultimately, however, these are only the metrics already described, reassessed for the specific context of use of a company or system. This means that these values are not established by NIST, but by the analyst of a specific context, e.g. a hospital with a certain infrastructure and certain requirements regarding the confidentiality, integrity and availability of information and information technology, i.e. its IT security requirements.
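A context-specific reassessment can be sketched as follows. The weights, constants and formula are assumed from the CVSS v3.1 specification; the function name and its parameters are hypothetical, and the temporal metrics are left at 'not defined' to keep the sketch short. Modified metrics (e.g. `MAV:A`) override the disclosed base values, and the security requirements `cr`, `ir` and `ar` weight the three impact metrics.

```python
import math

# Weights assumed from the CVSS v3.1 specification.
AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}
AC = {"L": 0.77, "H": 0.44}
UI = {"N": 0.85, "R": 0.62}
CIA = {"H": 0.56, "L": 0.22, "N": 0.0}
PR = {False: {"N": 0.85, "L": 0.62, "H": 0.27},  # scope unchanged
      True: {"N": 0.85, "L": 0.68, "H": 0.5}}    # scope changed
REQ = {"X": 1.0, "H": 1.5, "M": 1.0, "L": 0.5}   # security requirements


def roundup(value: float) -> float:
    """Round up to one decimal place, avoiding floating-point artefacts."""
    scaled = int(round(value * 100000))
    if scaled % 10000 == 0:
        return scaled / 100000.0
    return (math.floor(scaled / 10000) + 1) / 10.0


def environmental_score(base_vector: str, modified: str = "",
                        cr: str = "X", ir: str = "X", ar: str = "X") -> float:
    """Environmental score with all temporal metrics left at 'not defined'."""
    m = dict(part.split(":") for part in base_vector.split("/"))
    # Modified metrics override the disclosed base values ("MAV" -> "AV").
    for part in filter(None, modified.split("/")):
        key, value = part.split(":")
        if value != "X":
            m[key[1:]] = value
    changed = m["S"] == "C"

    miss = min(1 - (1 - REQ[cr] * CIA[m["C"]])
                 * (1 - REQ[ir] * CIA[m["I"]])
                 * (1 - REQ[ar] * CIA[m["A"]]), 0.915)
    if changed:
        impact = 7.52 * (miss - 0.029) - 3.25 * (miss * 0.9731 - 0.02) ** 13
    else:
        impact = 6.42 * miss
    exploitability = (8.22 * AV[m["AV"]] * AC[m["AC"]]
                      * PR[changed][m["PR"]] * UI[m["UI"]])

    if impact <= 0:
        return 0.0
    total = impact + exploitability
    if changed:
        total *= 1.08
    return roundup(min(total, 10))


vector = "AV:N/AC:L/PR:L/UI:N/S:U/C:H/I:H/A:H"
# Hypothetical hospital context: the device is only reachable from the adjacent
# network, and integrity and availability requirements are high.
print(environmental_score(vector, modified="MAV:A", ir="H", ar="H"))  # 8.0
```

Without modified metrics and with all requirements left at 'not defined', the sketch returns the base score of the vector (8.8 in this example).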

Further information

Read more here on the subject of IT security in healthcare and on IT security management according to AAMI TIR 57.

3. How vulnerability scores and risk management interact

a) The scores do not measure risks

‘CVSS Measures Severity, not Risk’, says the official user guide. For medical devices, this is true in a further respect: the scores indicate how probable it is that a component will be compromised and thus will not behave according to specifications. They measure neither the probability nor the severity of (physical) damage, and so do not measure risk as defined by ISO 14971.

b) The scores help to assess risks

However, the scores provide a metric that helps to assess the probability of damage or system malfunctions more accurately (see also Fig. 1). Adapting these metrics by means of the environmental metric group, that is, to the specific context, helps in this assessment.

c) Scores in the post-market phase

The CVSS is particularly significant for manufacturers in the post-market phase: manufacturers must constantly keep an eye on the messages about vulnerabilities and decide whether measures need to be taken. It is precisely in this decision-making process and when prioritising measures that the scores are useful.

Obviously, a product with a vulnerability that can only be exploited with physical access to the product does not have the same priority as a product that can be attacked remotely via the network without user interaction.
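Such a prioritisation rule can be sketched in a few lines. The priority labels and thresholds below are purely hypothetical; each manufacturer has to define and justify its own criteria.

```python
def triage_priority(vector: str, score: float) -> str:
    """Assign a hypothetical handling priority to a scored vulnerability."""
    m = dict(part.split(":") for part in vector.split("/"))
    remote = m.get("AV") == "N"           # attackable via the network
    no_interaction = m.get("UI") == "N"   # no user interaction required

    if remote and no_interaction and score >= 7.0:
        return "immediate"
    if m.get("AV") == "P" or score < 4.0:  # physical access only, or low score
        return "routine"
    return "scheduled"


print(triage_priority("AV:N/AC:L/PR:L/UI:N/S:U/C:H/I:H/A:H", 8.8))  # immediate
print(triage_priority("AV:P/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H", 6.8))  # routine
```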

Tip

The MDR requires the PMS plan to set out criteria according to which manufacturers take corrective and preventive measures. The metrics of the CVSS lend themselves to this.

In inspections, auditors and inspectors will select the vulnerabilities with the highest score in order to check whether the manufacturer has detected and eliminated the vulnerability effectively and in good time. Notifying the user and, where applicable, the authorities, of the measures in compliance with the law is part of these inspections.

d) Vulnerabilities in the risk management file

There is no point in documenting every reported vulnerability in the ‘risk table’. However, manufacturers should check the following for each of the above-mentioned vulnerabilities:

- Completeness of the components analysed

The malfunctioning of the affected components has already been analysed in the risk table. Otherwise, it needs to be added. The FMEA lends itself as a risk analysis method here.

- Accuracy of the estimated probabilities

The probabilities estimated in the risk table agree with the actual events and the CVSS assessment. Otherwise, they must be corrected and the risks reassessed.

- Accuracy of the anticipated malfunction

The malfunctioning of components that may arise due to the vulnerabilities is correctly assessed in the risk table. For example, the manufacturer may already have identified that, in a cyber attack, the components provide falsified data (integrity problem), but may not have considered that the attack could cause a memory leak that crashes the whole system (availability problem). This would also need to be added to the risk table, and the risks would need to be reassessed.

Careful!

If the vulnerabilities reported could lead to system malfunctions that have not previously been looked into, manufacturers must analyse the effects on patients, users and third parties.

e) Procedure specifications or work instructions

Therefore, it makes sense to work in two steps and, if necessary, with two work instructions or procedure specifications:

- The first describes how to carry out the above-mentioned analysis. It should require a brief written record for each vulnerability stating whether the current risk analysis covers everything. If so, a reference to the ‘risk ID’ is enough; if not, information on the change to the risk management file is required. This description is specific to IT security.

- The second specifies how new or changed risks are to be documented in the risk management file. This specification is largely not specific to IT security.

4. Conclusion

a) Deductions

Manufacturers of medical devices which contain software or are software must constantly keep an eye on the vulnerability databases (e.g. NIST). To be able to classify the messages published there, it is essential to understand the metrics of the Common Vulnerability Scoring System.

Since there can be thousands of messages a month, they can only reasonably be monitored automatically.
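A minimal sketch of such automatic filtering is shown below. It assumes that the messages have already been downloaded and reduced to simple dictionaries; the keys, the inventory format and all values are hypothetical placeholders, not a real database schema or real entries.

```python
from typing import Dict, Iterable, List


def relevant_messages(messages: Iterable[dict], inventory: Dict[str, str],
                      min_score: float = 0.0) -> List[dict]:
    """Keep messages that affect a component version in use, highest score first."""
    hits = []
    for msg in messages:
        used_version = inventory.get(msg["product"])
        if (used_version is not None
                and used_version in msg["affected_versions"]
                and msg["score"] >= min_score):
            hits.append(msg)
    return sorted(hits, key=lambda m: m["score"], reverse=True)


# Hypothetical component inventory of a device: product name -> version in use
inventory = {"examplelib": "1.0.1", "example-os": "5.2"}

# Placeholder messages; not real vulnerability entries
messages = [
    {"id": "EXAMPLE-1", "product": "examplelib", "affected_versions": ["1.0.0", "1.0.1"], "score": 6.8},
    {"id": "EXAMPLE-2", "product": "example-os", "affected_versions": ["5.2"], "score": 8.8},
    {"id": "EXAMPLE-3", "product": "unused-lib", "affected_versions": ["2.0"], "score": 9.8},
]

for msg in relevant_messages(messages, inventory):
    print(msg["id"], msg["score"])
```

Exact string matching of product names and versions is a simplification; real feeds require more careful matching.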

The Common Vulnerability Scoring System (CVSS) can assess only to a limited extent how vulnerable a system (e.g. a medical device) is. Manufacturers therefore need to take the specific context, and thus the environmental metric group, into account. The values that these metrics adopt are determined, for example, by the specific system design and the clinical context.

b) Tasks

This gives the manufacturers the following tasks:

- Accurately set out the clinical context in the intended purpose and accompanying materials. This is also a requirement of the MDR.

- Derive the IT security requirements of the device and design a system architecture that is as resilient to cyber attacks as possible. In both cases, the free IT security guideline, which has since been adopted by the notified bodies, will help.

- Choose components whose manufacturers eliminate vulnerabilities as quickly as possible.

- Draw up a post-market surveillance plan for every product which, among other things, sets out how the manufacturer reacts to messages scored with the CVSS.

- Draw up and maintain an SOP on post-market surveillance (PMS) which, among other things, requires or describes these measures. This SOP should contain or refer to the procedure specifications or work instructions mentioned under point 3.e).

- Use an IT system that automatically compiles messages about vulnerabilities (or use Post-Market Radar, which also assesses other sources of information and so saves the manufacturer a lot of PMS work).